It’s fascinating to watch a computer recognize a cat in a photo, something simple for us but surprisingly hard for machines. Before Convolutional Neural Networks (CNNs), image processing was cumbersome. Engineers had to define features by hand, such as edges, corners, and color histograms, and then build complex systems around them. CNNs changed this by letting machines learn those patterns directly from data.

CNNs are loosely inspired by how the brain processes vision. They stack layers that detect progressively more complex patterns, moving from edges to shapes to whole objects. Today, CNNs power facial recognition, self-driving cars, and medical diagnostics, and they deliver fast, consistent results without constant human oversight. They show that a simple design, repeated layer after layer, can lead to remarkable outcomes.

You don’t need a PhD to understand CNNs. Their structure is intuitive if you picture it as an assembly line for images. Each layer has a job: it looks at its input, extracts something specific, and passes the result on to the next layer.

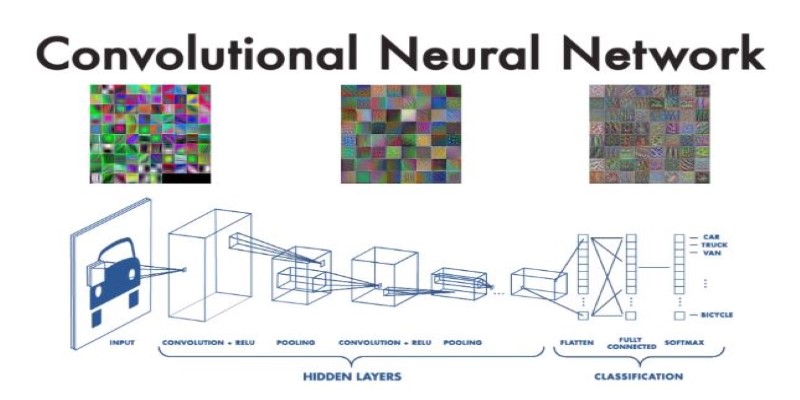

The line starts with convolutional layers, which act as pattern detectors. A small grid of weights, called a kernel or filter, slides over the image. At each position, it computes a weighted sum of the pixels underneath. The response is strong wherever the patch matches the pattern the kernel encodes, such as an edge, a corner, or a texture. These responses are recorded in a new representation called a feature map.
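To make the sliding-kernel idea concrete, here is a minimal pure-Python sketch. It assumes a single grayscale channel, stride 1, and no padding. The 5×5 image and the vertical-edge kernel are hand-picked illustrations; in a real CNN, the kernel weights are learned during training. Like most deep learning libraries, the sketch computes cross-correlation, meaning the kernel is not flipped.

```python
def convolve2d(image, kernel):
    """Slide `kernel` over `image` (stride 1, no padding) and return the feature map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the image patch currently under the kernel
            total = sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            row.append(total)
        feature_map.append(row)
    return feature_map


# Hypothetical 5x5 image: dark on the left, bright on the right
image = [[0, 0, 0, 9, 9] for _ in range(5)]

# Hand-picked vertical-edge detector (a trained CNN would learn its own weights)
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

for row in convolve2d(image, kernel):
    print(row)  # each row: [0, 27, 27]
```

The feature map lights up (27) only where the window straddles the dark-to-bright boundary. It stays at 0 over the flat dark region.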

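Then comes ReLU (Rectified Linear Unit), a simple operation that replaces every negative value with zero and leaves positive values unchanged. Think of it as filtering out weak or irrelevant responses and keeping the strong ones. More importantly, it introduces non-linearity. Without it, stacked linear layers would collapse into a single linear transformation no matter how many you added, and the CNN would be limited to linear relationships.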
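ReLU itself is a one-liner. The sketch below applies it element by element to a small, made-up feature map.

```python
def relu(x):
    """Rectified Linear Unit: keep positive values, replace negatives with 0."""
    return max(0.0, x)


def relu_map(feature_map):
    """Apply ReLU to every value in a 2D feature map."""
    return [[relu(v) for v in row] for row in feature_map]


feature_map = [[-3.0, 5.0], [2.0, -1.0]]  # hypothetical convolution output
print(relu_map(feature_map))  # [[0.0, 5.0], [2.0, 0.0]]
```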

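After that, pooling layers step in. Their job is to shrink the feature maps. The most common variant, max pooling, splits each map into small windows (often 2×2) and keeps only the largest value in each one. It is like zooming out while holding on to the strongest signals. This reduces the computation needed in later layers and makes the model less sensitive to small shifts in the image, while still preserving the essential features.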
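Here is a minimal sketch of non-overlapping max pooling. It assumes a 2×2 window with a stride equal to the window size, a common default but not the only option. The 4×4 feature map is made up for illustration.

```python
def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling: keep the largest value in each size x size window."""
    pooled = []
    for i in range(0, len(feature_map) - size + 1, size):
        row = []
        for j in range(0, len(feature_map[0]) - size + 1, size):
            window = [
                feature_map[i + di][j + dj]
                for di in range(size)
                for dj in range(size)
            ]
            row.append(max(window))
        pooled.append(row)
    return pooled


feature_map = [
    [1, 3, 2, 0],
    [4, 6, 1, 1],
    [0, 2, 5, 7],
    [1, 1, 8, 3],
]
print(max_pool2d(feature_map))  # [[6, 2], [2, 8]]
```

Sixteen values shrink to four, and each survivor is the strongest response in its region.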

By the time we reach the fully connected layers, all the extracted feature maps have been flattened into a single vector. These layers combine the features into a score for each possible class and make the prediction, whether that's a car, a tumor, or a handwritten digit. This is where the decision is made.
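The sketch below shows the final stage: flattening, a single fully connected layer, and a softmax that turns scores into probabilities. The two class names ("cat", "dog") and all weights are hypothetical and hand-picked so the arithmetic is easy to follow. A trained network would learn them, and real networks usually have more than one fully connected layer.

```python
import math


def flatten(feature_maps):
    """Turn a list of 2D feature maps into one flat vector."""
    return [v for fmap in feature_maps for row in fmap for v in row]


def dense(vector, weights, biases):
    """Fully connected layer: one weighted sum (plus bias) per output class."""
    return [
        sum(w * x for w, x in zip(class_weights, vector)) + b
        for class_weights, b in zip(weights, biases)
    ]


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


labels = ["cat", "dog"]                                  # hypothetical classes
feature_maps = [[[6, 2], [2, 8]], [[0, 1], [3, 0]]]      # two pooled 2x2 maps
weights = [
    [0.5, 0, 0, 0.5, 0, 0, 0, 0],   # "cat" weights (made up)
    [0, 0, 0, 0, 0, 1, 1, 0],       # "dog" weights (made up)
]
biases = [0.0, 0.0]

vector = flatten(feature_maps)
scores = dense(vector, weights, biases)
probs = softmax(scores)
prediction = labels[probs.index(max(probs))]
print(prediction)  # cat
```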

What's remarkable is that every stage learns on its own. For an image classification task, you feed a CNN hundreds or thousands of labeled images, and over time it adjusts itself to recognize what matters. Nobody has to tell it that whiskers are useful for identifying cats; it discovers that through trial and error during training.

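This learning is powered by backpropagation. After each prediction, a loss function measures how far the output is from the correct answer. Backpropagation then works out how much each weight contributed to that error. An optimizer such as gradient descent uses this to nudge every weight in the direction that reduces the error. It's a constant cycle of guessing, checking, and updating that gradually improves the model with each iteration.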
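The guess, check, and update cycle can be seen in a minimal sketch that trains one linear neuron (`prediction = w * x + b`) with gradient descent on a squared-error loss. With a single neuron, backpropagation reduces to one application of the chain rule. In a real CNN, the same rule is applied backward through every layer, usually by a framework's automatic differentiation. The toy data (generated from y = 3x + 1), the learning rate, and the epoch count are all illustrative assumptions.

```python
# Toy data generated by y = 3x + 1 (the model is never told this)
data = [(0.0, 1.0), (1.0, 4.0), (2.0, 7.0), (3.0, 10.0)]

w, b = 0.0, 0.0        # start from an uninformed guess
learning_rate = 0.05

for epoch in range(500):
    grad_w = grad_b = 0.0
    for x, y in data:
        prediction = w * x + b     # guess
        error = prediction - y     # check
        # Chain rule on the loss error**2:
        # d/dw = 2 * error * x, d/db = 2 * error
        grad_w += 2 * error * x
        grad_b += 2 * error
    # Update: step against the average gradient
    w -= learning_rate * grad_w / len(data)
    b -= learning_rate * grad_b / len(data)

print(round(w, 2), round(b, 2))  # 3.0 1.0
```

Each pass shrinks the error a little. After a few hundred passes, the parameters settle on values that reproduce the data.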

Look closely and you’ll find CNNs practically everywhere. If you unlock your phone with your face, a CNN was most likely behind that decision. It extracted the key features of your face, like the distance between your eyes or the shape of your jawline, and matched them against stored data.
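Real face-unlock systems are far more involved. The sketch below illustrates only the final matching step. It assumes a CNN has already turned each face into a fixed-length feature vector, often called an embedding. Cosine similarity is one common way to compare such vectors, but it is an assumption here, and the vectors and the 0.9 threshold are made-up values.

```python
import math


def cosine_similarity(a, b):
    """Similarity of two vectors by angle: 1.0 means they point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def is_match(candidate, enrolled, threshold=0.9):
    """Accept the face if its embedding is close enough to the enrolled one."""
    return cosine_similarity(candidate, enrolled) >= threshold


enrolled = [0.8, 0.1, 0.5, 0.3]          # hypothetical embedding saved at setup
same_person = [0.78, 0.12, 0.52, 0.28]   # hypothetical new scan, same face
someone_else = [0.1, 0.9, 0.2, 0.6]      # hypothetical scan of a different face

print(is_match(same_person, enrolled))   # True
print(is_match(someone_else, enrolled))  # False
```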

CNNs have become the gold standard in image classification. They power the tools that categorize photos on your phone, detect spam images on social platforms, and even help astronomers tag galaxies in space imagery. CNNs have also brought a quiet revolution to agriculture by classifying plant diseases from photos, reducing crop loss, and guiding treatment.

The medical field has embraced them, too. From detecting skin cancer in dermatology images to analyzing chest X-rays, CNNs offer a second set of eyes that doesn't tire and can pick up subtle patterns. And unlike early attempts at AI in healthcare, CNNs can perform with high precision when trained on enough good-quality data.

In autonomous vehicles, CNNs interpret a car's surroundings: they recognize pedestrians, read traffic signs, and track lane markings. Here, accuracy has to be delivered in real time. CNNs can process images quickly while still extracting detailed features, which makes them a practical fit.

Security systems use CNNs for surveillance, filtering real threats from harmless background activity. Industrial companies use them for quality control—scanning thousands of products a minute to catch defects. These aren’t flashy use cases, but they’re vital to efficiency.

CNNs play a role even in creative fields. Art generation, photo enhancement, and automatic tagging systems all lean on CNNs' pattern-recognition power. The same kind of architecture that spots a zebra in the wild can also sharpen the colors of a vintage photo or recommend filters based on what a photo shows.

For all their success, Convolutional Neural Networks aren’t the final form of vision-based AI. But they’ve laid the foundation. In many ways, they taught us that machines can see—not just record or replicate, but really see patterns and understand visual context.

Researchers are now building hybrid models that combine CNNs with transformers or recurrent networks. These models handle not just single images but also sequences of frames, live video feeds, or multimodal inputs like audio with video. In many of these models, CNNs still form the backbone, serving as the first filter for visual information.

Another direction is explainability. CNNs work well, but they've long been seen as black boxes whose decisions are hard to trace. Techniques such as saliency maps and Grad-CAM highlight which parts of an image influenced a model's decision. This adds transparency in fields like medicine or law enforcement, where trust matters.
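One of the simplest explainability techniques is occlusion sensitivity. It is a different method from gradient-based approaches like Grad-CAM, but it gets at the same question. The idea is to hide one patch of the image at a time and measure how much the model's score drops. In the minimal sketch below, `toy_score` is a hypothetical stand-in for a trained CNN's class score, deliberately built to depend only on the top-left corner. A real model would be called in its place.

```python
def occlusion_map(image, score_fn, patch=2):
    """Gray out one patch at a time and record how much the model's score drops.

    Large drops mark regions the model relies on for its decision.
    """
    base = score_fn(image)
    h, w = len(image), len(image[0])
    heatmap = []
    for i in range(0, h, patch):
        row = []
        for j in range(0, w, patch):
            occluded = [r[:] for r in image]  # copy so the original is untouched
            for di in range(i, min(i + patch, h)):
                for dj in range(j, min(j + patch, w)):
                    occluded[di][dj] = 0
            row.append(base - score_fn(occluded))
        heatmap.append(row)
    return heatmap


def toy_score(img):
    """Hypothetical model score that only 'looks at' the top-left 2x2 region."""
    return img[0][0] + img[0][1] + img[1][0] + img[1][1]


image = [
    [5, 5, 1, 1],
    [5, 5, 1, 1],
    [1, 1, 1, 1],
    [1, 1, 1, 1],
]
print(occlusion_map(image, toy_score))  # [[20, 0], [0, 0]]
```

The heatmap correctly points to the top-left patch as the only region this "model" cares about.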

We’re also seeing efforts to shrink CNN models for edge devices. Think of smart cameras or wearables that need on-device image processing but can’t afford heavy cloud processing costs. Lighter CNNs are being designed to run with less memory and compute while maintaining decent accuracy.
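One widely used way to build lighter CNNs, popularized by the MobileNet family, is to replace a standard convolution with a depthwise separable one. A depthwise separable convolution is a k×k filter applied to each input channel separately, followed by a 1×1 convolution that mixes channels. The sketch below compares weight counts (biases ignored) for an illustrative layer shape of our choosing: a 3×3 kernel, 128 input channels, and 256 output channels.

```python
def standard_conv_params(k, c_in, c_out):
    """Weights in a standard k x k convolution (biases ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k, c_in, c_out):
    """k x k depthwise conv (one filter per input channel) + 1x1 pointwise conv."""
    return k * k * c_in + c_in * c_out


k, c_in, c_out = 3, 128, 256  # illustrative layer shape
standard = standard_conv_params(k, c_in, c_out)
separable = depthwise_separable_params(k, c_in, c_out)
print(standard, separable, round(standard / separable, 1))  # 294912 33920 8.7
```

For this shape, the separable version needs roughly 8.7 times fewer weights. That kind of saving is what lets such models fit on small devices, usually at some cost in accuracy.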

The combination of efficiency and accuracy keeps CNNs relevant. They're no longer trendy, but they're dependable. They don't make headlines like some newer AI models, yet behind many vision systems that work, a CNN is doing its job quietly and effectively.

While some advancements aim to replace CNNs, many simply build on what they've already achieved. That's the mark of a solid foundation—one that can adapt, evolve, and remain useful even as the landscape changes.

Convolutional Neural Networks (CNNs) have revolutionized image processing by enabling machines to learn and interpret visual data autonomously. Their ability to detect patterns and classify images with high accuracy has made them indispensable in fields ranging from healthcare to autonomous vehicles. While advancements continue, CNNs remain the backbone of many modern vision-based systems, providing reliable and efficient performance. Their adaptability suggests they will continue to play a crucial role in shaping the future of AI-driven image recognition.
