Artificial Intelligence feels like magic—machines recognize faces, drive cars, and answer questions in seconds. But behind all this brilliance hides a serious problem most people never see: AI security risks. These risks don't come from typical hackers breaking into systems. Instead, they come from something far more subtle: adversarial attacks.

These attacks fool AI models into making bad decisions using small, invisible tricks. A stop sign becomes a speed sign. A safe system becomes vulnerable. In a world racing toward automation, understanding these hidden threats isn’t just smart — it’s necessary for keeping technology safe and trustworthy.

AI security risks aren’t your average tech problem. They don’t come knocking on the front door like stolen passwords or obvious hacking attempts. Instead, they slip quietly into the very mind of the machine — right where it learns, decides, and reacts. AI systems depend on patterns and data to function. But here’s the catch — if attackers can feed those systems bad patterns or sneaky data, the AI doesn’t just make a small mistake — it completely misjudges reality.

Picture this: a company uses facial recognition to control building access. It feels secure until someone figures out how to fool the system into seeing a stranger as an authorized employee. There are no alarms or alerts, just easy access. That's not science fiction. Researchers have already demonstrated attacks of this kind against facial recognition models.

The scary part? These attacks can be nearly impossible to spot. To us, the image is still a photo of a cat. But with a few changes too small for a human to see, an attacker can make the AI believe it's a toaster or a tree. It's like watching a magician deceive the smartest person in the room.

What makes AI security risks so dangerous is simple: they take AI's biggest talent, recognizing patterns, and turn it into a vulnerability. Most people won't see this coming until it's too late.

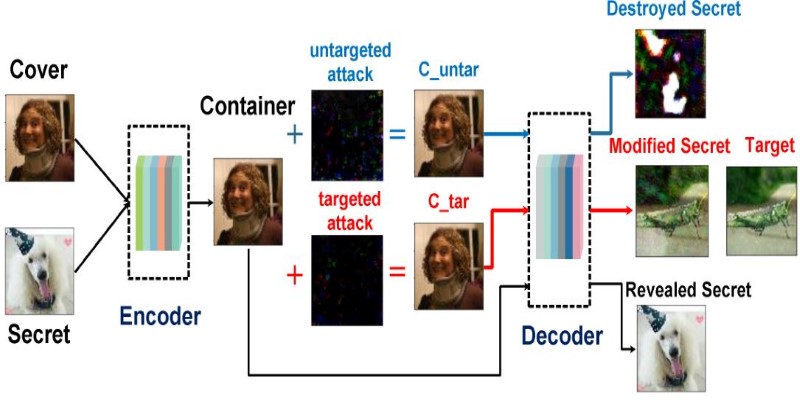

Adversarial attacks are like mind games for machines. They use tiny changes in input data — changes that humans barely notice — to completely confuse AI systems. The goal is simple: break the trust between what we see and what AI believes.

Let’s say you’re driving a smart car. You come up to a stop sign. To your eyes, it’s clear as day. But if an attacker has placed special stickers or marks on the sign — ones carefully designed to fool the car’s AI — it might read it as a speed limit sign instead. That could lead to a dangerous situation.

This is how adversarial attacks work in practice — adding digital noise to images, tweaking audio commands, or manipulating text data so that AI misinterprets the message. The scariest part? Adversarial attacks are evolving fast. Hackers are always testing new ways to stay one step ahead of security measures.
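To make the "digital noise" idea concrete, here is a minimal sketch of one well-known recipe, the fast gradient sign method (FGSM), applied to a toy logistic classifier. Everything in it is an assumption for illustration: the 100 "pixel" features, the weights, and the `epsilon` budget are made up, and real attacks target far larger models. The point is only that many tiny nudges, each in the worst possible direction, can add up to a flipped decision.

```python
import math

def predict(weights, bias, x):
    """Probability of class 1 under a toy logistic model."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def sign(v):
    """Return -1, 0, or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)

def fgsm_perturb(weights, bias, x, label, epsilon):
    """Move every feature by at most epsilon in the direction that raises the loss."""
    p = predict(weights, bias, x)
    # For logistic regression, the gradient of the cross-entropy loss
    # with respect to the input is (p - label) * weights.
    grad = [(p - label) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]

# Hypothetical model and input: 100 "pixels" with values near 0.5.
n = 100
weights = [0.5 if i % 2 == 0 else -0.5 for i in range(n)]
bias = 0.0
x = [0.52 if i % 2 == 0 else 0.48 for i in range(n)]
epsilon = 0.05  # maximum change allowed per pixel

x_adv = fgsm_perturb(weights, bias, x, label=1, epsilon=epsilon)

print("clean prediction:      ", predict(weights, bias, x))
print("adversarial prediction:", predict(weights, bias, x_adv))
print("largest pixel change:  ", max(abs(a - b) for a, b in zip(x, x_adv)))
```

No single pixel moves by more than `epsilon`, yet the model's answer crosses the 0.5 decision line. That is the same mechanism, at toy scale, behind the sticker-on-a-stop-sign scenario.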

These attacks aren't limited to cars and cameras. Adversarial attacks are being tested against medical systems, financial fraud detection systems, voice assistants, and even military drones. If AI is running it, there's someone out there trying to fool it.

The damage caused by adversarial attacks isn’t just about embarrassing mistakes or technical glitches. We’re talking about real-world consequences that affect safety, security, and even human lives.

In healthcare, an AI system reading medical images could be tricked into missing a tumor or misdiagnosing a condition. In finance, fraud detection tools could be bypassed by carefully manipulated data, costing companies millions. In smart homes, voice assistants could be tricked into opening doors, transferring money, or revealing sensitive information.

Perhaps most alarming is the risk to self-driving cars. A single misread traffic sign or invisible road hazard could lead to accidents. The more we rely on AI for important decisions, the higher the stakes become when those systems are attacked.

AI security risks are not a distant threat. It’s not a question of whether attackers will try these tricks, because they already are. The real question is: Are we prepared to defend against them?

The good news? AI security is not standing still. Developers and researchers are already building defenses to keep these attacks at bay. But like any good security strategy, there’s no single magic solution.

One powerful method is adversarial training. This means generating adversarial examples during the learning phase and training the model on them alongside the normal data, so it learns to give the right answer even when an input has been tampered with. It's like giving the AI practice rounds so it knows what to watch for.
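As a rough illustration of those practice rounds, the sketch below trains a tiny logistic classifier on each clean example plus a perturbed copy crafted against the current model. It is a toy under stated assumptions: the two-cluster dataset, the FGSM-style perturbation, and hyperparameters such as `eps`, `epochs`, and `lr` are all invented for the example, and production systems typically use stronger attacks inside a deep learning framework.

```python
import math
import random

def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def predict(w, b, x):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def sign(v):
    return (v > 0) - (v < 0)

def fgsm(w, b, x, y, eps):
    # Shift each feature by eps in the direction that increases the loss.
    p = predict(w, b, x)
    return [xi + eps * sign((p - y) * wi) for xi, wi in zip(x, w)]

def sgd_step(w, b, x, y, lr):
    # One gradient step on the cross-entropy loss for a single example.
    p = predict(w, b, x)
    new_w = [wi - lr * (p - y) * xi for wi, xi in zip(w, x)]
    return new_w, b - lr * (p - y)

def adversarial_train(data, eps, epochs=30, lr=0.1, seed=0):
    rng = random.Random(seed)
    data = list(data)
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        rng.shuffle(data)
        for x, y in data:
            x_adv = fgsm(w, b, x, y, eps)       # craft the attack first
            w, b = sgd_step(w, b, x, y, lr)     # learn from the clean input
            w, b = sgd_step(w, b, x_adv, y, lr) # learn from the attacked input
    return w, b

def accuracy(w, b, data, eps=0.0):
    # With eps > 0, every input is attacked before it is classified.
    correct = 0
    for x, y in data:
        x_eval = fgsm(w, b, x, y, eps) if eps > 0 else x
        correct += int((predict(w, b, x_eval) >= 0.5) == (y == 1))
    return correct / len(data)

# Hypothetical dataset: two well-separated 2D clusters.
rng = random.Random(42)
data = [([1 + rng.gauss(0, 0.3), 1 + rng.gauss(0, 0.3)], 1) for _ in range(50)]
data += [([-1 + rng.gauss(0, 0.3), -1 + rng.gauss(0, 0.3)], 0) for _ in range(50)]

w, b = adversarial_train(data, eps=0.3)
print("clean accuracy:       ", accuracy(w, b, data))
print("accuracy under attack:", accuracy(w, b, data, eps=0.3))
```

On a problem this simple the gain over ordinary training is small. The sketch is meant to show the shape of the loop (attack, then train on both versions), not to measure how much robustness it buys.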

Another smart defense is input sanitization. This technique checks and cleans all incoming data before the AI processes it. If anything looks suspicious, the system either corrects it or rejects it altogether. It’s a digital version of checking someone’s ID before letting them into a secure area.
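Here is one way that ID check might look for image-like input, as a sketch rather than a complete defense. The function name `sanitize_pixels` and its parameters are hypothetical. It rejects malformed input outright, clamps values into the expected range, and then reduces precision, an idea sometimes called feature squeezing, so that very small perturbations tend to be rounded away.

```python
import math

def sanitize_pixels(pixels, expected_len, levels=5):
    """Validate a flat list of pixel values and snap each one to a coarse grid.

    Raises ValueError for input that cannot be a valid image.
    """
    if len(pixels) != expected_len:
        raise ValueError("unexpected input size")
    cleaned = []
    for v in pixels:
        if not isinstance(v, (int, float)) or not math.isfinite(v):
            raise ValueError("non-numeric or non-finite pixel value")
        v = min(1.0, max(0.0, float(v)))  # clamp into the valid range [0, 1]
        # Quantize to `levels` evenly spaced values.
        cleaned.append(round(v * (levels - 1)) / (levels - 1))
    return cleaned

original = [0.0, 0.52, 0.48, 1.0]
tampered = [0.0, 0.47, 0.53, 1.3]  # small nudges plus one out-of-range value

print(sanitize_pixels(original, expected_len=4))
print(sanitize_pixels(tampered, expected_len=4))
```

In this example both inputs collapse to the same cleaned values, so the model downstream would see no difference between them. An attacker who knows about the rounding can design around it, which is why sanitization usually works best as one layer among several.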

Explainable AI is another breakthrough in defense. These models provide clear reasons for their decisions, helping developers and security teams understand if something unusual is happening. If an AI system suddenly gives an odd result, teams can investigate why — and fix the problem fast.
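For a simple linear model, an explanation can be as basic as listing how much each input pushed the score up or down. The sketch below assumes a hypothetical fraud-scoring model; the feature names, weights, and values are invented for illustration, and complex models need dedicated attribution methods rather than this direct breakdown.

```python
def explain(weights, bias, x, names):
    """Return the model score and each feature's contribution, largest first."""
    contributions = {n: w * xi for n, w, xi in zip(names, weights, x)}
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked

# Hypothetical fraud model: a positive score means "flag this transaction".
names = ["amount_zscore", "account_age_years", "foreign_merchant"]
weights = [1.5, -2.0, 0.5]
bias = -1.0
x = [2.0, 0.5, 1.0]

score, ranked = explain(weights, bias, x, names)
print("score:", score)
for name, contribution in ranked:
    print(f"{name:>20}: {contribution:+.2f}")
```

If a decision suddenly hinges on a feature that normally carries little weight, that is a cue for the security team to take a closer look at the input.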

Of course, defenses must evolve alongside attacks. Hackers are creative, and so security teams must stay ahead of the game by constantly updating their models, testing for vulnerabilities, and sharing knowledge across industries.

AI security risks are no longer just a future concern — they are a present-day challenge. Adversarial attacks show how vulnerable AI systems can be when exposed to carefully crafted threats. These attacks can mislead smart systems in critical industries like healthcare, finance, and transportation, leading to real-world dangers. Defending against these risks requires proactive strategies like adversarial training, input sanitization, and explainable AI models. As AI continues to shape modern life, building stronger, more resilient systems is essential. Staying ahead of adversarial attacks is not optional — it’s the key to securing the future of artificial intelligence.
